The production of real-time Cinematics is a non-linear process, which often requires artists from different disciplines to start working on cut-scenes for a story that hasn’t been finalised yet.

When I joined Revolution Software as Lead Cinematic Artist on Beyond a Steel Sky (BASS from now on), the game was already at an advanced stage of production and the deadline for cinematic content was nearly 6 months away, but most of the dialogue and a few of the game’s missions had not been locked yet.

The challenge ahead was to keep the Cinematic team running without major hiccups or bottlenecks, while responding with flexibility and agility to changes in the story and dialogue.

Below I will dissect the processes that allowed a small team of 12 artists to deliver nearly 120 different cinematic sequences in the span of 7 months, while working from home in the middle of the pandemic.

Collaborative Workflows

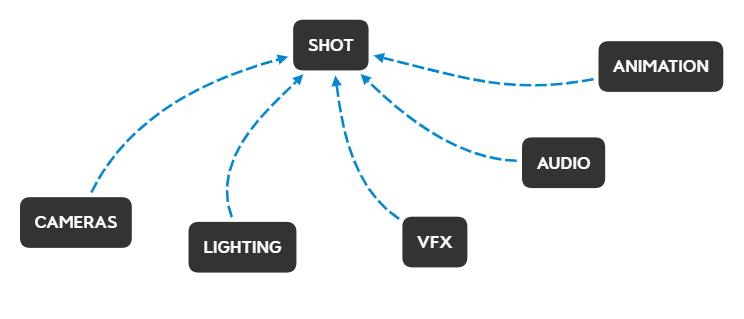

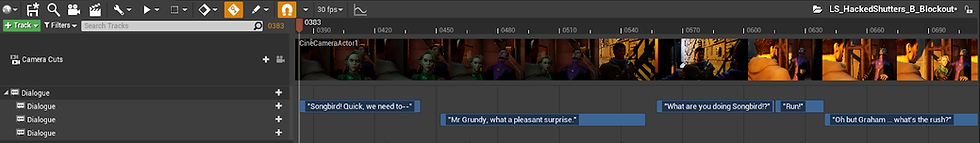

Setting up a Collaborative Workflow from the block-out stage saved us a great deal of time and budget, as every department (Audio, Vfx, Animation, Lighting, etc.) was able to add its contributions to the timelines as soon as they were ready, without having to wait on anyone else.

I have talked about Collaborative Workflows in a previous article.

If you want to read more about it, you can find it here.

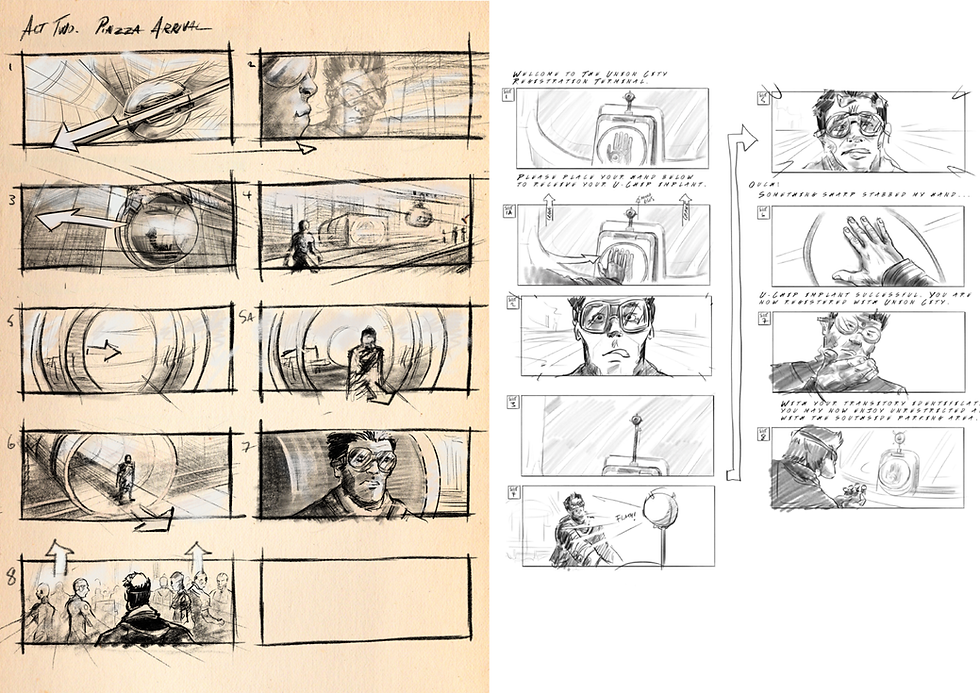

Storyboards and Animatics

Along with the script and narrative design documents, one of the first things I was given was a set of Storyboards and Animatics for some of the key sequences of the story.

These are invaluable tools when it comes to providing Direction and Intention, as they let you determine the timing of a sequence or a given shot in advance, before committing part of your budget to the creation of the necessary assets.

In the case of the Opening Credits sequence, the final result stayed close to the animatic, and all its key elements (anticipation, crescendo, climax, etc.) remained nearly the same.

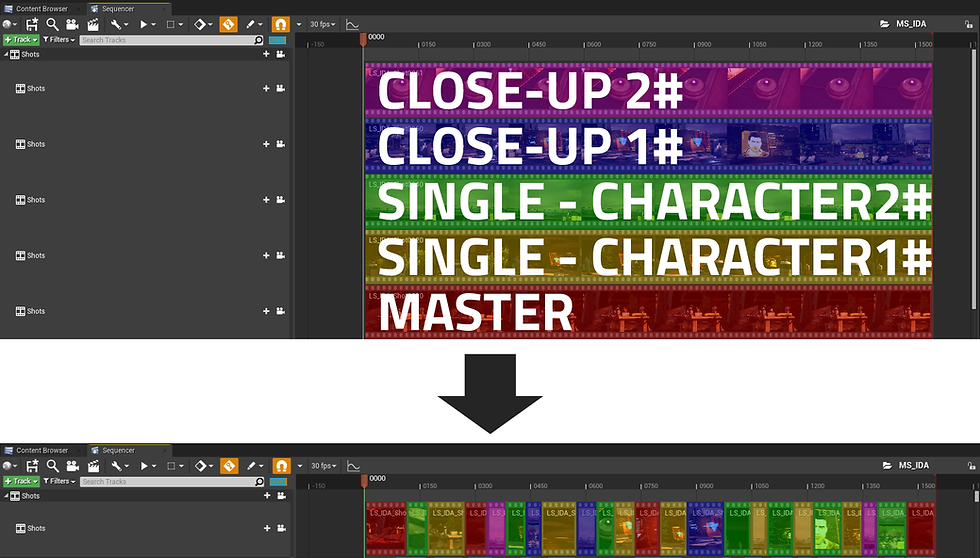

Camera Blockout and Editing

Given the large number of dialogue scenes we had to cover, we opted for an editing workflow very similar to the one used in filmmaking.

Rather than creating each shot from scratch, adding animation data and trying to match the start and end of the previous and following shots, we instead created a single, continuous animation sequence using placeholder data, such as in-game walk cycles, idles and various character gestures.

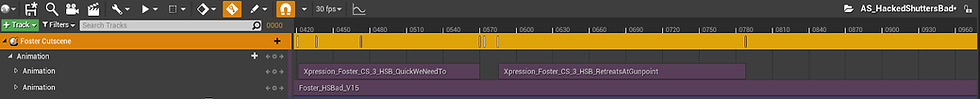

The resulting animation sequence was then shared across all shots (usually a Master shot plus one Single shot per character, in a few variants such as Mid-shot, Wide-shot and Close-up). These shots were in turn aligned on the timeline, ready to be cut and edited.
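To make the idea concrete, here is a minimal Python sketch of a cut list over one shared performance. It is an illustration under stated assumptions, not the Unreal Sequencer API: the `Cut` class, `master_performance()` and the character positions are hypothetical stand-ins. Each cut only decides which camera is live over a range of timeline time, and every camera samples the same performance at the same time, so a cut can change the view but never the characters' state.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Cut:
    """One edit decision (hypothetical model): which camera is live from start to end, in seconds."""
    camera: str
    start: float
    end: float


def master_performance(t: float) -> Dict[str, float]:
    """Hypothetical stand-in for the shared, continuous placeholder animation:
    returns each character's x position at timeline time t."""
    return {"foster": 1.0 * t, "npc": 5.0 - 0.5 * t}


def render_edit(cuts: List[Cut], fps: int = 4) -> List[Tuple[float, str, Dict[str, float]]]:
    """Walk the edit frame by frame. Every camera samples the SAME performance
    at the SAME time, so a cut changes the view, never the characters' state."""
    frames = []
    for cut in cuts:
        n_frames = round((cut.end - cut.start) * fps)
        for i in range(n_frames):
            t = cut.start + i / fps
            frames.append((t, cut.camera, master_performance(t)))
    return frames


# First edit: wide master shot, then two singles.
edit = [Cut("master_wide", 0.0, 2.0), Cut("foster_closeup", 2.0, 3.0), Cut("npc_mid", 3.0, 4.0)]
frames = render_edit(edit)

# Re-edit: open on the NPC instead, then hold the wide for the rest of the scene.
reedit = render_edit([Cut("npc_mid", 0.0, 1.0), Cut("master_wide", 1.0, 4.0)])
```

Because the performance lives outside the cuts, re-timing or reordering the edit changes only which camera is live at each frame; the characters' positions at every frame stay identical, which is what makes non-linear editing safe.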

If at any point the animation sequence needed to be updated, for example to modify a character's position, the artist would only need to do it once and the tweak would be reflected in all other shots sharing the same animation clip.

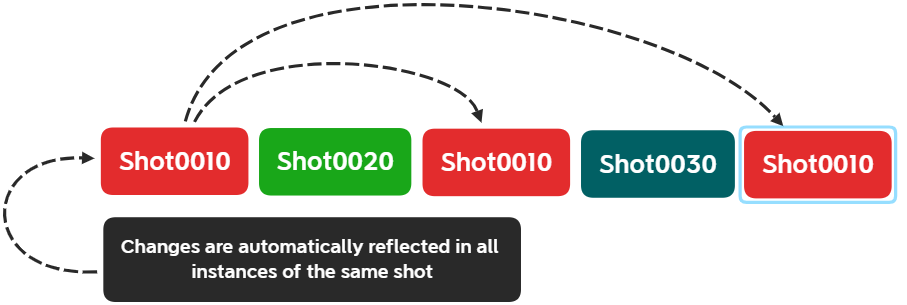

The same applies to camera tweaks. Modifying the camera angle of a Single shot requires only one iteration, because every other "fragment" of that shot on the master timeline is an instance rather than a standalone copy, so the changes carry over.
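In programming terms, the difference between an instanced shot and a copy-pasted one is the difference between sharing a reference and duplicating an object. The Python sketch below models this with hypothetical `Camera`, `Shot` and `Fragment` classes (illustrative only, not how Unreal actually stores Sequencer data): three fragments of the same close-up either point at one shared `Shot` or each own a deep copy of it.

```python
import copy
from dataclasses import dataclass


@dataclass
class Camera:
    yaw: float
    pitch: float
    focal_length_mm: float


@dataclass
class Shot:
    name: str
    camera: Camera


@dataclass
class Fragment:
    """A section of the master timeline (hypothetical model) showing one shot between start and end."""
    shot: Shot
    start: float
    end: float


closeup = Shot("foster_closeup", Camera(yaw=30.0, pitch=-5.0, focal_length_mm=50.0))

# Instanced: every fragment holds a reference to the same Shot object.
instanced = [Fragment(closeup, 2.0, 3.0), Fragment(closeup, 6.0, 7.5), Fragment(closeup, 10.0, 11.0)]

# Copy-pasted: every fragment owns an independent duplicate of the Shot.
copied = [Fragment(copy.deepcopy(closeup), f.start, f.end) for f in instanced]

# A single tweak to the camera angle...
closeup.camera.yaw = 35.0

print([f.shot.camera.yaw for f in instanced])  # [35.0, 35.0, 35.0]
print([f.shot.camera.yaw for f in copied])     # [30.0, 30.0, 30.0]
```

With instancing, one edit to the camera reaches every fragment; with copies, the same tweak would have to be repeated by hand on each fragment, and any fragment that gets missed becomes a continuity error.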

This approach gives you more control over a sequence's pacing, prevents continuity issues and also allows for non-linear editing.

Once we had achieved a solid edit, the sequence was ready for the various departments to create content around it.

Animation: Xsens Motion Capture

We could not have created the amount of animation data we needed without using Xsens Motion Capture technology as a starting point.

Once calibrated, the Xsens suit allowed us to capture a performance almost anywhere we wanted (often in our office in York), and one of us could jump in and capture a batch of animations at short notice.

This proved to be extremely convenient when lockdown began, as one of our artists was able to take the suit home and provide the rest of the team with the data needed to keep cinematic production rolling.

Once processed by the Xsens software, the captured data was retargeted to a generic humanoid skeleton and sent to the animators for clean-up before being added to the timelines.

Using MotionBuilder and Maya, the various performances were blended together, cleaned up and extended to achieve the desired result.

Facial Expressions and Lip Sync

Character expressions and Lip Sync did not share the same animation clip; instead, each had its own separate clip, layered on top of the character's body animation.

This means that Voice Over lines and Facial Expressions were not tied together, so we were able to offset or swap them as needed.
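Here is a minimal Python sketch of this layering, assuming a simplified timeline where each layer (body, expression, lip sync) is an independent list of clips. The clip names, timings and the `shift_layer()`/`swap_clip()` helpers are hypothetical; the point is only that delaying a VO line or swapping an expression touches its own layer and nothing else.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Clip:
    name: str
    start: float     # seconds on the sequence timeline
    duration: float  # seconds

    def active_at(self, t: float) -> bool:
        return self.start <= t < self.start + self.duration


Tracks = Dict[str, List[Clip]]  # layer name -> clips on that layer


def evaluate(tracks: Tracks, t: float) -> Dict[str, Optional[str]]:
    """For each layer, report which clip (if any) is playing at time t."""
    result: Dict[str, Optional[str]] = {}
    for layer, clips in tracks.items():
        playing = [c.name for c in clips if c.active_at(t)]
        result[layer] = playing[0] if playing else None
    return result


def shift_layer(tracks: Tracks, layer: str, offset: float) -> Tracks:
    """Offset every clip on one layer; all other layers are left untouched."""
    new = dict(tracks)
    new[layer] = [replace(c, start=c.start + offset) for c in tracks[layer]]
    return new


def swap_clip(tracks: Tracks, layer: str, old_name: str, new_name: str) -> Tracks:
    """Replace one clip with another of the same timing, on one layer only."""
    new = dict(tracks)
    new[layer] = [replace(c, name=new_name) if c.name == old_name else c for c in tracks[layer]]
    return new


tracks: Tracks = {
    "body": [Clip("walk_to_desk", 0.0, 4.0)],
    "expression": [Clip("surprise", 1.0, 1.5)],
    "lipsync": [Clip("vo_line_012", 1.5, 2.0)],
}
before = evaluate(tracks, 2.0)
print(before)  # {'body': 'walk_to_desk', 'expression': 'surprise', 'lipsync': 'vo_line_012'}

# A revised VO line needs to start one second later...
tracks = shift_layer(tracks, "lipsync", 1.0)
# ...and the scene now calls for a different expression; the body layer is never touched.
tracks = swap_clip(tracks, "expression", "surprise", "anger")
print(evaluate(tracks, 2.0))  # {'body': 'walk_to_desk', 'expression': 'anger', 'lipsync': None}
```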

- Expressions:

Expressions were created using “poses”. A pose contains the facial bones used to simulate the facial muscles (forehead, eyebrows, etc.), arranged so that they give a specific expression (anger, surprise, fear, pain, etc.) to the character they are applied to.

A pose can itself be a combination of several other poses, so an artist can create a potentially countless number of variations to suit the needs of the shot.
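The sketch below shows one way poses could be combined, under loudly stated assumptions: each pose is stored as a per-bone translation offset from the neutral face, and poses are mixed by weighted addition. Real facial rigs usually also involve rotations, and the exact blending math used on BASS is not described here; the bone names, values and the `combine()` helper are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # hypothetical: facial bone name -> (x, y, z) offset from the neutral face


def combine(*weighted_poses: Tuple[Pose, float]) -> Pose:
    """Blend poses additively: each bone's offset is the weighted sum of its
    offsets across the input poses. A bone absent from a pose contributes nothing."""
    totals: Dict[str, List[float]] = {}
    for pose, weight in weighted_poses:
        for bone, offset in pose.items():
            acc = totals.setdefault(bone, [0.0, 0.0, 0.0])
            for axis in range(3):
                acc[axis] += weight * offset[axis]
    return {bone: (acc[0], acc[1], acc[2]) for bone, acc in totals.items()}


# Hypothetical base poses (offsets in arbitrary rig units, y = up).
anger: Pose = {"brow_L": (0.0, -0.5, 0.0), "brow_R": (0.0, -0.5, 0.0), "jaw": (0.0, -0.25, 0.0)}
surprise: Pose = {"brow_L": (0.0, 1.0, 0.0), "brow_R": (0.0, 1.0, 0.0), "jaw": (0.0, -1.0, 0.0)}
smirk: Pose = {"mouth_corner_L": (0.25, 0.25, 0.0)}

# A new variation built from existing poses, ready to be tuned for a specific shot.
bitter_surprise = combine((anger, 0.5), (surprise, 0.5), (smirk, 1.0))
print(bitter_surprise["brow_L"])          # (0.0, 0.25, 0.0)
print(bitter_surprise["jaw"])             # (0.0, -0.625, 0.0)
print(bitter_surprise["mouth_corner_L"])  # (0.25, 0.25, 0.0)
```

Because the result is just another pose, it can be fed back into `combine()`, which is what makes the number of possible variations effectively unbounded.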

Once the artist is happy with the result, the Expression is saved as an animation clip and added to the timeline.

All expressions, except those for Foster (the main character), could be shared across all the other characters in the game.

- Lip Sync:

Lip Sync animation data was generated using Speech Graphics technology. The software analyses an audio input (such as a Voice Over audio file) and provides a synchronised facial animation file.

The generated data was then imported into Unreal through a proprietary Dialogue Tool, developed in-house, which also produced the final dialogue clip containing the VO audio file, the related captions in 7 different languages and the Lip Sync animation data.

The Lip Sync and Expression clips were then added to the timeline on top of the body animation data and shifted around as needed.

This pipeline proved to be effective and sustainable, considering that dialogue iterations continued until 1 month before the final deadline.

Vfx, Audio and Soundtrack

At the beginning of the Cinematic Production process, these three departments might look less active than the Animation and Camera departments, but that is only on the surface.

Audio artists, Vfx artists and the Soundtrack Composer spend most of their time in different areas of the engine, if not outside it entirely, while creating their content.

Visual Forest (Vfx) and PitStop (Audio) worked quietly (and hard) for quite some time, before populating the timelines with their contributions near the end of production.

This is because, even though they know exactly which content they need to generate, they require the timing of a sequence to be locked before they can trigger their effects or audio clips on the timeline.

It’s no coincidence that a sequence only truly comes to life once Audio and Music (OST by Alistair Kerley) make their appearance on screen (or rather, through the speakers).

In conclusion...

Adopting a Collaborative Workflow from the very beginning was key to keeping the Cinematic team running, as it allowed each department to contribute to the sequences independently.

Keeping Body, Facial Expression and Lip Sync data separate allowed for great flexibility and reusability, reducing costs and the impact on the budget.

In the same way, instancing shots on the Master Timeline, as opposed to copy-pasting the same shot every time it’s needed, reduced iteration time and mitigated continuity issues when tweaking camera angles or character positioning.

I encourage you either to train your existing Cinematic Team (including Cinematic Designers) in filmmaking practices and language, or to hire professionals with a film background and make them part of your team as early as possible.

My name is Matteo Grossi, I am a freelance Lead Cinematic Artist.

If you need help setting up a Cinematic Pipeline or leading the Cinematic Team for your next project, feel free to get in touch on LinkedIn or reach me at grossimatte@gmail.com
